Quicklink: https://api.a2e.ai

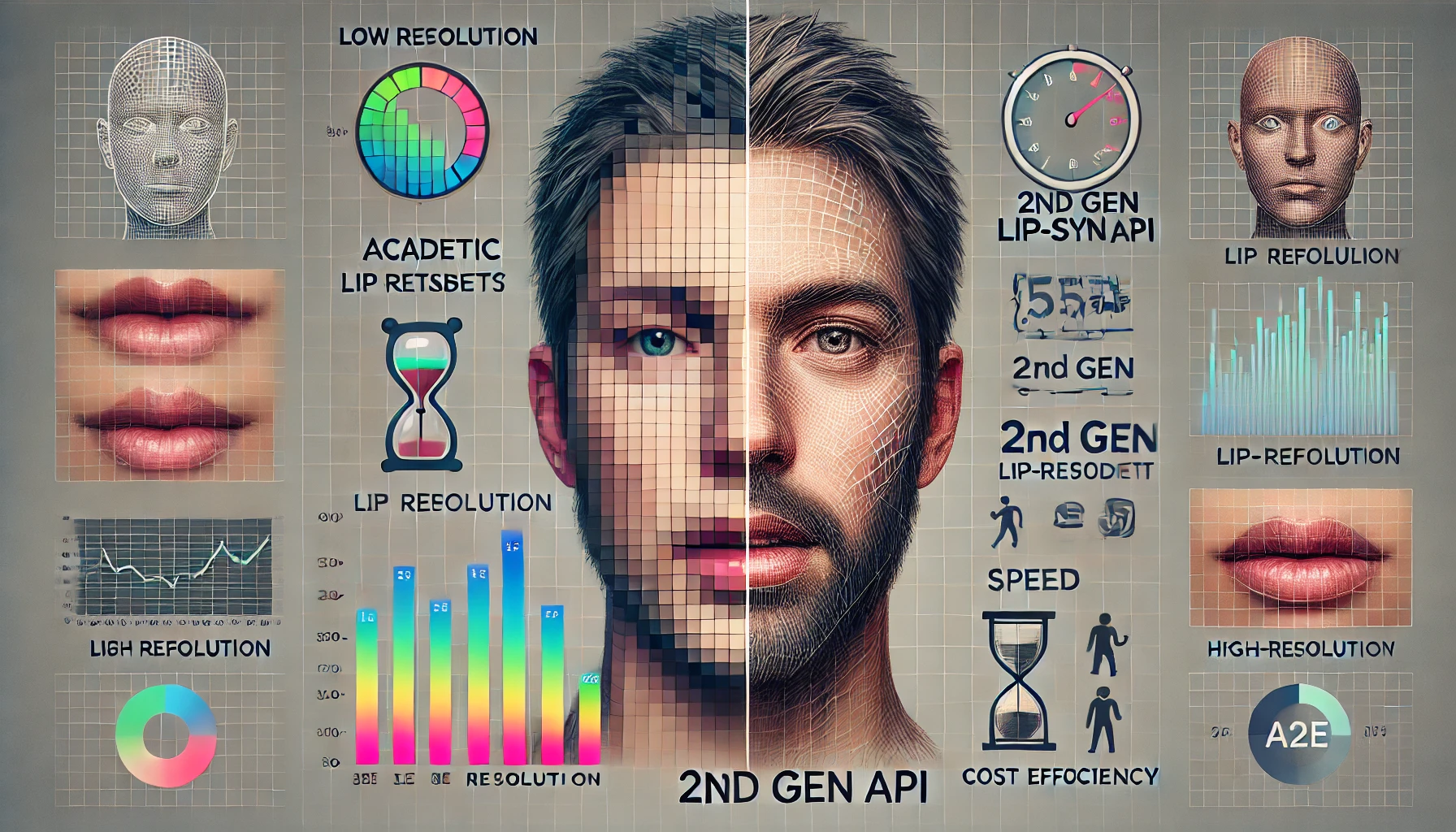

Lip-sync technology uses AI algorithms to synchronize the lip movements of a digital avatar with arbitrary audio input. The process takes two inputs: a video clip of a person and an audio segment. The output is a video in which the person’s lip movements are aligned with the new audio.

High-quality lip-sync remains an open research challenge. Notable contributions such as Wav2Lip, VideoReTalking, DINet, and SadTalker have demonstrated impressive results under specific conditions. However, achieving consistently high-quality results across diverse scenarios remains a significant hurdle. The crux of the issue is the scarcity of training data. Datasets comprising thousands of individuals are considered large in academia, but they are insufficient for the variety encountered in real-world applications.

Moreover, the computational demands of lip-sync algorithms pose another barrier to widespread adoption. Open-source solutions typically run at about one-tenth of real-time speed, meaning that it takes approximately 10 GPU minutes to synthesize just one minute of AI-generated video. Assuming a cloud server with GPU capabilities costs around $1,000 per month, this translates to roughly $0.23 per minute of synthesized video, a prohibitively high expense for many applications.
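The $0.23 figure can be reproduced with a quick back-of-the-envelope calculation. The sketch below assumes the $1,000 is a monthly rental price for a single GPU server that runs continuously for a 30-day month; neither assumption is stated explicitly above, and the names are illustrative only.

```python
# Assumptions (not stated in the text): the $1,000 is a monthly price for one
# GPU server, and a month is 30 days of continuous use.
MONTHLY_SERVER_COST_USD = 1_000.0
MINUTES_PER_MONTH = 30 * 24 * 60  # 43,200 minutes

# From the text: about 10 GPU minutes per minute of synthesized video.
GPU_MINUTES_PER_VIDEO_MINUTE = 10.0


def cost_per_video_minute(monthly_cost_usd: float,
                          gpu_minutes_per_video_minute: float) -> float:
    """Return the GPU cost in USD of synthesizing one minute of video."""
    cost_per_gpu_minute = monthly_cost_usd / MINUTES_PER_MONTH
    return cost_per_gpu_minute * gpu_minutes_per_video_minute


baseline = cost_per_video_minute(MONTHLY_SERVER_COST_USD,
                                 GPU_MINUTES_PER_VIDEO_MINUTE)
print(f"${baseline:.2f} per minute of synthesized video")
```

Under these assumptions the result rounds to $0.23. A different billing period or a partially idle server would change the number.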

Another challenge is the resolution of the output video. Open-source algorithms typically produce low-resolution outputs, often around 256×256 pixels. In contrast, modern applications require much higher resolutions, usually 512×512 pixels or greater, especially for the mouth region. To meet these requirements, many solutions run an image enhancement module such as GPEN after the lip-sync step, which further increases computational cost and processing time.

A2E is excited to announce the release of our 2nd Gen Lip-Sync API, which brings significant innovations to the field. Our advancements are threefold:

- Comprehensive High-Resolution Dataset: We have curated a dataset containing approximately 10,000 distinct individuals captured in high-resolution video, roughly 10 times larger than the datasets typically used in academic research.

- Optimized Neural Network Architecture: We have developed a novel lip-sync neural network with substantially improved inference speed. Our approach reduces the computational cost to just 20% of what is required by conventional open-source algorithms.

- High-Resolution Output Without Post-Processing: Our innovative neural network is capable of generating high-resolution video directly, eliminating the need for additional image enhancement modules.
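To put the 20% figure in concrete terms, here is a rough sketch that applies it to the open-source baseline quoted earlier. It assumes the reduction applies directly to GPU time on comparable hardware and reuses the assumed price of about $1,000 per 30-day month. It estimates raw compute cost only and says nothing about A2E's actual pricing.

```python
# Figures quoted in the text.
BASELINE_GPU_MINUTES = 10.0  # GPU minutes per video minute, open-source models
COST_RATIO = 0.20            # "20% of what is required" by those models

# Assumption: one GPU server at $1,000 per 30-day month, used continuously.
USD_PER_GPU_MINUTE = 1_000.0 / (30 * 24 * 60)

reduced_gpu_minutes = BASELINE_GPU_MINUTES * COST_RATIO
reduced_cost_usd = reduced_gpu_minutes * USD_PER_GPU_MINUTE

print(f"{reduced_gpu_minutes:.1f} GPU minutes per minute of video")
print(f"about ${reduced_cost_usd:.3f} of GPU time per minute of video")
```

With these inputs, one minute of video needs about 2 GPU minutes, or a little under 5 cents of GPU time.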

With these innovations, A2E offers developers a highly cost-effective and quality-focused solution for lip-sync. In many cases, our API is not only more affordable than deploying open-source lip-sync algorithms in the cloud but also produces video outputs of superior quality and resolution.

Explore this breakthrough at https://api.a2e.ai. Developers can access our API through a free tier that includes two personal avatars and 100 seconds of video synthesis. Additional credits can be purchased starting at just $9.90.