At A2E.AI, protecting children is not just a legal obligation—it’s a core value that shapes every aspect of our platform. This post explains what CSAM is, how we actively combat it, and what you can do to help.

What is CSAM?

Child Sexual Abuse Material (CSAM), formerly referred to as “child pornography,” encompasses any visual depiction of sexually explicit conduct involving a minor (anyone under 18 years of age). This includes photographs, videos, digital images, and computer-generated imagery that depict minors engaged in sexual activity or in a sexually suggestive manner.

The term “CSAM” is now preferred by child safety organizations and law enforcement because it more accurately reflects the abusive nature of this content. These are not simply “images”—they represent real crimes committed against real children, and each viewing perpetuates that abuse.

Why AI Platforms Must Take CSAM Seriously

The emergence of generative AI has created new challenges in the fight against CSAM. AI tools can be misused to:

- Generate synthetic CSAM depicting fictional minors

- Modify existing images of real children into explicit content

- Create “deepfake” videos involving minors

- De-age adults to appear as minors in explicit content

As an AI video generation platform, we at A2E.AI recognize our responsibility to prevent our technology from being weaponized against children.

How A2E.AI Combats CSAM

We’ve implemented a multi-layered defense system designed to prevent, detect, and report any potential CSAM on our platform. Our approach combines cutting-edge AI technology with established industry best practices.

1. Minor Age Detection System

Our platform employs advanced AI-powered age estimation technology that analyzes both input images and generated outputs. This system:

- Scans all uploaded content before processing to identify any images that may contain minors

- Monitors generation requests for prompts or parameters that could result in content depicting minors in inappropriate contexts

- Reviews generated outputs to ensure no content depicting minors in explicit or suggestive scenarios is produced

- Blocks and flags any content that triggers our detection thresholds for immediate review

Our age detection models are continuously trained and updated to maintain high accuracy across diverse demographics while minimizing false positives.
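The staged checks described above can be sketched roughly as follows. This is a minimal illustration, not A2E.AI's actual implementation: the stage names, the `RISK_THRESHOLD` value, and the idea of a single risk score per stage are all assumptions made for the example, and the scores themselves would come from classifiers that are not shown here.

```python
from enum import Enum
from typing import Dict, Optional, Tuple


class Decision(Enum):
    ALLOW = "allow"
    BLOCK_AND_FLAG = "block_and_flag"


# Hypothetical stage order: uploaded input, generation prompt, generated output.
STAGES = ("upload", "prompt", "output")

# Illustrative threshold only; a real system would tune and audit this value.
RISK_THRESHOLD = 0.5


def evaluate_request(risk_by_stage: Dict[str, float]) -> Tuple[Decision, Optional[str]]:
    """Return the decision and the first stage that triggered it, if any.

    `risk_by_stage` maps a stage name to a score in [0, 1] produced by a
    hypothetical classifier for that stage. Stages without a score are skipped.
    """
    for stage in STAGES:
        risk = risk_by_stage.get(stage)
        if risk is not None and risk >= RISK_THRESHOLD:
            # Stop at the first triggering stage and send it to human review.
            return Decision.BLOCK_AND_FLAG, stage
    return Decision.ALLOW, None


if __name__ == "__main__":
    print(evaluate_request({"upload": 0.1, "prompt": 0.9, "output": 0.0}))
```

Checking the stages in order means a request that fails at the upload or prompt stage is stopped before any output is generated.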

2. Hash Database Matching for Known CSAM

A2E.AI integrates with established hash databases that contain digital “fingerprints” of known CSAM content. This technology allows us to:

- Identify known CSAM instantly, without human reviewers needing to view the content

- Block uploads of known illegal material in real time

- Report matches to appropriate authorities as required by law

These hash-matching systems use technologies similar to Microsoft’s PhotoDNA and other industry-standard solutions used by major platforms worldwide. Even if an image is slightly modified, cropped, or resized, modern perceptual hashing can still identify matches to known CSAM.
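To illustrate why a slightly altered image can still match, here is a generic sketch of perceptual-hash comparison using Hamming distance. It is not PhotoDNA, which is proprietary and works differently, and it is not A2E.AI's implementation. The 64-bit hash values, the `MAX_DISTANCE` threshold, and the function names are assumptions for the example; in practice the known hashes would come from a vetted hash-list provider and the candidate hash from a perceptual hashing library.

```python
from typing import Iterable, Optional

# Illustrative tolerance: how many differing bits still count as a match.
MAX_DISTANCE = 6


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions in which two integer hashes differ."""
    return bin(a ^ b).count("1")


def find_match(
    candidate: int,
    known_hashes: Iterable[int],
    max_distance: int = MAX_DISTANCE,
) -> Optional[int]:
    """Return the closest known hash within `max_distance`, or None."""
    best_hash: Optional[int] = None
    best_distance = max_distance + 1
    for known in known_hashes:
        distance = hamming_distance(candidate, known)
        if distance < best_distance:
            best_hash, best_distance = known, distance
    return best_hash


if __name__ == "__main__":
    known = [0xFFFF0000FFFF0000, 0x0123456789ABCDEF]  # placeholder values
    # Two low bits differ from the first known hash, so it still matches.
    print(hex(find_match(0xFFFF0000FFFF0003, known)))
```

Unlike a cryptographic hash, where any change to the file produces an unrelated value, a perceptual hash changes only a few bits under small edits, which is why a distance threshold rather than exact equality is used.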

3. Content Policy and User Accountability

Beyond technological safeguards, we maintain strict policies:

- Zero-tolerance policy: Any user found attempting to generate, upload, or distribute CSAM faces immediate and permanent account termination

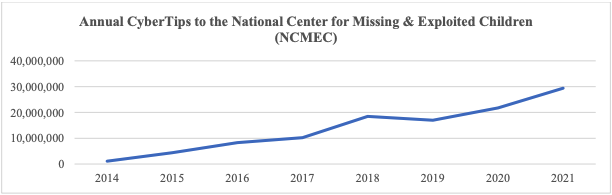

- Mandatory reporting: We report all apparent CSAM to the National Center for Missing & Exploited Children (NCMEC) as required by federal law

- Law enforcement cooperation: We fully cooperate with law enforcement investigations and preserve relevant evidence

- Human review: Flagged content undergoes review by trained trust and safety personnel

4. Proactive Monitoring and Continuous Improvement

Child safety is not a one-time implementation—it requires ongoing vigilance:

- Regular audits of our detection systems

- Participation in industry working groups focused on AI safety

- Updates to our models as new evasion techniques emerge

- Investment in research and development for improved detection methods

Global Laws Against CSAM

The United States has some of the world’s strongest laws against CSAM, but this is a global issue addressed by legislation in countries around the world. Here’s an overview of how different regions approach this critical issue:

United States

Federal laws governing CSAM include:

- 18 U.S.C. § 2251 – Sexual exploitation of children (production)

- 18 U.S.C. § 2252 – Distribution, receipt, and possession of CSAM

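- 18 U.S.C. § 2252A – Activities relating to material constituting or containing child pornography, including computer-generated images that are indistinguishable from depictions of a real minor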

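- PROTECT Act (2003) – Criminalizes obscene visual depictions of minors, including drawings and computer-generated images (18 U.S.C. § 1466A), which reaches virtual and AI-generated material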

- EARN IT Act (introduced 2020, reintroduced 2022) – Proposed legislation, not enacted, that would increase platform accountability for CSAM

For a first offense, production carries a prison sentence of 15 to 30 years (a 15-year mandatory minimum), and distribution carries 5 to 20 years (a 5-year mandatory minimum).

European Union

The EU addresses CSAM through:

- Directive 2011/93/EU – Harmonizes CSAM laws across member states, including virtual depictions

- Digital Services Act (DSA) – Requires platforms to provide notice-and-action mechanisms for reporting and removing illegal content, including CSAM

- Proposed CSAM Regulation (2022) – Would require platforms to detect and report CSAM (currently under debate)

United Kingdom

- Protection of Children Act 1978 – Criminalizes indecent photographs of children

- Coroners and Justice Act 2009 – Explicitly covers non-photographic (including AI-generated) images

- Online Safety Act 2023 – Requires platforms to proactively prevent CSAM

Canada

- Criminal Code Section 163.1 – Comprehensive CSAM laws including “written material” and computer-generated imagery

- Mandatory reporting requirements for internet service providers

Australia

- Criminal Code Act 1995 – Federal CSAM offenses including computer-generated material

- Online Safety Act 2021 – Grants eSafety Commissioner power to remove content and hold platforms accountable

Japan

- Act on Punishment of Activities Relating to Child Prostitution and Child Pornography (1999, amended 2014) – Criminalizes possession and distribution

- Note: Japan has faced international criticism for weaker regulations on illustrated/animated depictions

International Frameworks

- Optional Protocol to the UN Convention on the Rights of the Child on the Sale of Children, Child Prostitution and Child Pornography – Requires states parties to criminalize CSAM

- Budapest Convention on Cybercrime – International framework for cooperation on cybercrime including CSAM

- WeProtect Global Alliance – International coalition coordinating global response to online child exploitation

How to Report CSAM

If you encounter CSAM anywhere online—including on A2E.AI or any other platform—reporting it is crucial. Your report could help rescue a child from ongoing abuse. Here’s how to report:

In the United States

National Center for Missing & Exploited Children (NCMEC)

- Website: CyberTipline.org

- This is the centralized reporting system for all CSAM in the U.S.

- Reports are forwarded to appropriate law enforcement agencies

FBI

- Website: tips.fbi.gov

- For reporting serious cases or if you have information about producers/distributors

International Reporting

Internet Watch Foundation (IWF) – UK and international

- Website: iwf.org.uk

Canadian Centre for Child Protection

- Website: Cybertip.ca

Australian eSafety Commissioner

- Website: eSafety.gov.au

INHOPE – Global network of hotlines

- Website: inhope.org

- Find your country’s reporting hotline

Report Directly to A2E.AI

If you encounter any concerning content on our platform:

- Email: contact@a2e.ai

- We investigate all reports within 24 hours and report confirmed CSAM to NCMEC

Important Reporting Guidelines

- Do not download, screenshot, or share CSAM—even to report it. Simply report the URL or location.

- Do not attempt to investigate or contact suspected offenders

- Do provide as much context as possible in your report

- Do report even if you’re unsure—let trained professionals make the determination

Our Ongoing Commitment

At A2E.AI, we believe that the power of AI should be used to create, inspire, and innovate—never to harm. Child safety is woven into the foundation of our platform, not added as an afterthought.

We are committed to:

- Continuously improving our detection capabilities

- Maintaining transparency about our safety measures

- Collaborating with law enforcement and child safety organizations

- Supporting industry-wide efforts to combat AI-generated CSAM

If you have questions about our safety measures or suggestions for improvement, please contact us at contact@a2e.ai.

Together, we can ensure AI technology remains a force for good.